Fine-Tuning Vision Language Models

Introduction

Vision Language Models (VLMs), which can interpret images and answer questions about them in natural language, are among the most capable tools in today's AI landscape. Off-the-shelf AI tools, including Large Language Models (LLMs) and VLMs, are broadly useful, but they often fall short of reflecting a company’s unique brand identity or operational needs.

Now imagine an AI that handles general tasks and also embodies your company’s distinctive style and ethos.

At ARThink AI, we specialize in fine-tuning VLMs on proprietary data, so that our AI solutions not only perform tasks efficiently but also align closely with your brand’s narrative and style, drawing on insights from your own data collections.

This blog post explores how to customize a VLM to your specific requirements, details the operational steps involved, and discusses the applications and benefits of deploying a tailored VLM across different industries.

From healthcare to e-commerce and education, the scope is vast and promising. Join us as we delve into the transformative world of fine-tuned VLMs.

Understanding the Use Case of Fine-Tuning VLMs on Proprietary Data

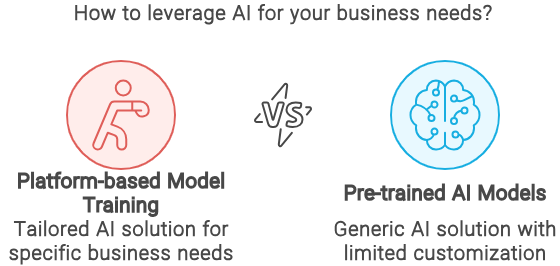

The concept of platform-based model training

In today’s tech-driven world, AI tailored to specific business needs is a significant advantage. The cornerstone of this personalized approach is platform-based model training, which lets businesses upload their proprietary data to a platform.

This process enables the creation of a highly specialized Vision Language Model (VLM). The principle is straightforward: through the platform, businesses interact directly with the model’s learning process, guiding it through the nuances of their specific visual and language data.

Imagine a system learning directly from a company’s unique dataset comprising images, descriptions, or other media relevant to their industry. This capability not only enhances relevance but also amplifies the effectiveness of the AI application in real-world scenarios.

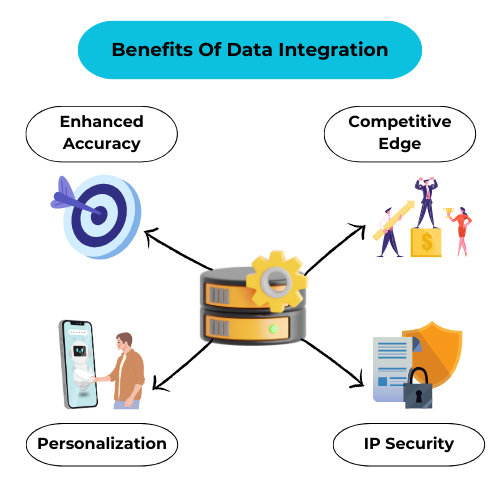

Benefits of custom data incorporation

Fine-tuning VLMs on proprietary data opens the door to a broad spectrum of benefits:

Personalization:

Models fine-tuned with proprietary data understand and reflect the business’s unique attributes and style.

Enhanced Accuracy:

When trained on data that reflects specific cases or scenarios, the model typically becomes better at interpreting and responding to similar situations in the future.

Competitive Edge:

Tailored models can provide insights and automation capabilities that generic models cannot, offering businesses a competitive advantage in their niche.

Intellectual Property Security:

Training on in-house data, within infrastructure you control, helps keep sensitive information secure and avoids exposing proprietary technology externally.

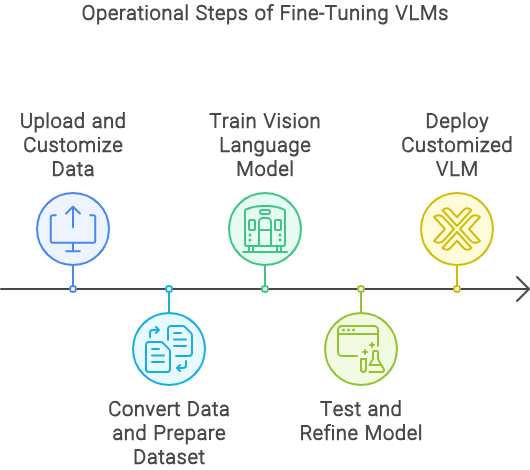

Operational Steps in Fine-Tuning VLMs

Data upload and customization

The first step in the fine-tuning process is uploading and customizing the data. Users can upload many types of images, such as product photos, medical images, or any other visuals relevant to their field.

During this stage, special attention goes to customizing the prompts or questions attached to each image, which makes the data more specific and relevant. For example, instead of a generic prompt like “Describe this image,” a medical technology company might use “Identify the medical device shown in this image.”
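To make prompt customization concrete, here is a minimal Python sketch. The domain names, the extra e-commerce prompt, and the override logic are hypothetical illustrations rather than part of any specific platform; only the generic and medical-device prompts come from the example above.

```python
from typing import Optional

# Hypothetical prompt table: a generic fallback plus domain-specific overrides.
GENERIC_PROMPT = "Describe this image."

DOMAIN_PROMPTS = {
    # Example taken from the text above.
    "medical_devices": "Identify the medical device shown in this image.",
    # Illustrative extra entry; adapt to your own catalog.
    "ecommerce": "Describe the product in this image, including its color and material.",
}


def prompt_for(domain: str, override: Optional[str] = None) -> str:
    """Pick the question to attach to an image.

    A per-image override wins, then the domain prompt, then the generic prompt.
    """
    if override:
        return override
    return DOMAIN_PROMPTS.get(domain, GENERIC_PROMPT)


if __name__ == "__main__":
    print(prompt_for("medical_devices"))
    print(prompt_for("unknown_domain"))
    print(prompt_for("ecommerce", override="Is this jacket waterproof?"))
```

Keeping a generic fallback means every image still gets a usable question even when no domain-specific prompt has been written yet.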

Data conversion and dataset preparation

Once the data is uploaded, the next step is converting it into a format suitable for model training. Typically, this means storing the image files in the cloud so that each one has a URL the training pipeline can access. Users then download a CSV file with columns for the image name, the image URL, and the customized image question.
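The CSV step can be sketched in a few lines of standard-library Python. This is an illustration under assumptions: the base URL is a placeholder for wherever the images are actually hosted, and the column names `image_name`, `image_url`, and `image_question` are hypothetical labels for the three fields described above.

```python
import csv
import io
from urllib.parse import quote

# Hypothetical storage location; replace with wherever your images are hosted.
BASE_URL = "https://storage.example.com/my-bucket/images"

# Column names are assumptions matching the three fields described above.
FIELDNAMES = ["image_name", "image_url", "image_question"]


def build_rows(image_names, question):
    """Pair each uploaded image with its URL and its custom question."""
    return [
        {
            "image_name": name,
            # Percent-encode the file name so spaces etc. form a valid URL.
            "image_url": f"{BASE_URL}/{quote(name)}",
            "image_question": question,
        }
        for name in image_names
    ]


def write_csv(rows, stream):
    """Write the rows as CSV with a header line."""
    writer = csv.DictWriter(stream, fieldnames=FIELDNAMES)
    writer.writeheader()
    writer.writerows(rows)


if __name__ == "__main__":
    rows = build_rows(
        ["scan 01.png", "scan_02.png"],
        "Identify the medical device shown in this image.",
    )
    buffer = io.StringIO()
    write_csv(rows, buffer)
    print(buffer.getvalue())
```

When writing to a real file instead of a buffer, open it with `newline=""`, as the Python `csv` documentation recommends, to avoid blank lines on some platforms.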

The CSV file is crucial because it pairs each image with the prompt the model should learn to answer, teaching it how to respond to queries about that image. The data then goes through a preparation phase in which it is converted into a dataset compatible with tools such as Hugging Face, setting the stage for efficient model training.
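For supervised fine-tuning, each question also needs a target answer, which the three-column CSV described above does not include. The sketch below therefore assumes a hypothetical extra `answer` column and converts each row into a chat-style record: an image placeholder followed by the question, then the assistant's reply. Many VLM chat templates use a layout like this, but the exact schema depends on the model and training script, so treat it as an assumption to check against their documentation.

```python
import csv
import io


def row_to_record(row):
    """Convert one CSV row into a chat-style training example.

    The schema (an image placeholder followed by the question, then the
    assistant's answer) is an assumption; match your model's chat template.
    """
    return {
        "image_url": row["image_url"],
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image"},
                    {"type": "text", "text": row["image_question"]},
                ],
            },
            {
                "role": "assistant",
                "content": [{"type": "text", "text": row["answer"]}],
            },
        ],
    }


def load_records(stream):
    """Read every CSV row and convert it into a training record."""
    return [row_to_record(row) for row in csv.DictReader(stream)]


if __name__ == "__main__":
    # Made-up sample row for illustration only.
    sample = io.StringIO(
        "image_name,image_url,image_question,answer\n"
        "scan_01.png,https://storage.example.com/my-bucket/images/scan_01.png,"
        "Identify the medical device shown in this image.,A pulse oximeter.\n"
    )
    records = load_records(sample)
    print(records[0]["messages"][0]["content"][1]["text"])
```

A plain list of dictionaries like this can then be wrapped with `Dataset.from_list` from the Hugging Face `datasets` library; the images themselves still have to be fetched from their URLs before training.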

Model training and testing phases

With the dataset in the right format, the actual training of the VLM begins. This stage uses the prepared data to teach the model to interpret each image and answer its associated prompt accurately.

Training is computationally intensive because of both the size of the model and the volume of data involved. After training, the model is tested, ideally on held-out examples it never saw during training, to ensure it can handle real-world data and scenarios effectively.

The goal here is not just to have an AI that recognizes images but one that can understand context, make connections, and provide useful feedback, mirroring the proprietary style of the dataset it was trained on.
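Meaningful testing requires examples the model never saw during training. One common way to set them aside is a deterministic, hash-based split, sketched below. This is an illustrative technique, not necessarily the one any particular platform uses; the `image_name` key and the 10% default test fraction are assumptions.

```python
import hashlib


def split_of(image_name: str, test_fraction: float = 0.1) -> str:
    """Assign an image to "train" or "test" deterministically.

    Hashing the name (instead of shuffling randomly) keeps each image in the
    same split across re-runs, so test images never leak into training.
    """
    digest = hashlib.sha256(image_name.encode("utf-8")).digest()
    value = int.from_bytes(digest[:8], "big")  # roughly uniform in [0, 2**64)
    return "test" if value < test_fraction * 2**64 else "train"


def split_records(records, test_fraction: float = 0.1):
    """Partition records (dicts with an "image_name" key) into train and test lists."""
    train, test = [], []
    for record in records:
        if split_of(record["image_name"], test_fraction) == "test":
            test.append(record)
        else:
            train.append(record)
    return train, test


if __name__ == "__main__":
    records = [{"image_name": f"img_{i:04d}.png"} for i in range(1000)]
    train, test = split_records(records)
    print(len(train), len(test))
```

Because the split depends only on the file name, adding new images later does not reshuffle existing ones between the training and test sets.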

By following these steps, businesses can harness customized Vision Language Models to transform their operations, gaining new levels of interaction, automation, and insight in their respective industries.

Practical Applications of Fine-Tuned VLMs

Healthcare and Medical industry

Vision Language Models (VLMs) fine-tuned for the healthcare industry can change how medical data is interpreted and used. For instance, VLMs can read and respond to inquiries about X-rays, MRIs, and other imaging data, giving clinicians relevant information quickly.

This can help speed up diagnosis and improve patient care by supporting faster, better-informed decisions about treatment options. VLMs can also help manage and catalog large volumes of medical imagery, making it easier for healthcare professionals to access historical data.

E-commerce advancements

In the e-commerce space, fine-tuned VLMs offer a suite of advantages that personalize and enhance the shopping experience. These models can analyze and describe product images and field complex customer queries about specifics like size, material, or compatibility.

Imagine a virtual shopping assistant that not only identifies products from images but also provides recommendations based on visual similarities and customer preferences. This level of interaction can significantly improve customer engagement and satisfaction, leading to higher conversion rates and a more dynamic shopping environment.

Educational support

Educational institutions can use fine-tuned VLMs to provide an advanced, interactive learning environment. These models can analyze educational material and generate detailed explanations or answer student queries instantly.

Whether it’s solving math problems from a textbook or giving instant feedback on a science experiment setup, VLMs can act as an always-available tutor, drawing on knowledge from their training data. This kind of AI assistance can be particularly valuable in remote learning settings, helping students get high-quality support regardless of their physical location.

Manufacturing optimization

In manufacturing, fine-tuned VLMs can streamline processes by quickly analyzing images of machine parts to detect anomalies or predict failures before they occur. This proactive maintenance capability can save considerable time and resources, preventing expensive downtime and prolonging machinery life.

Moreover, integrating VLMs into the manufacturing process can smooth the workflow, because these models can interpret technical diagrams or blueprints, speeding up the setup or modification of production lines.

IT and code troubleshooting

The ability of fine-tuned VLMs to interpret and troubleshoot issues directly from screenshots or error images can significantly enhance IT support systems. Developers and IT professionals can use these models to quickly identify issues in code blocks, system setups, or even UI/UX anomalies.

This immediate assistance can drastically reduce resolution times, improving efficiency and user satisfaction. Furthermore, IT departments can deploy these models to assist users by providing instant solutions to common problems, reducing the workload on human support teams.

Challenges in Fine-Tuning VLMs

Data storage complexities

Fine-tuning VLMs effectively can require handling large amounts of visual and textual data. Storing such datasets demands not only ample storage capacity but also efficient data retrieval systems.

This can be particularly challenging for smaller organizations or startups without the requisite infrastructure. Additionally, ensuring the security and privacy of this data, especially when it involves sensitive or proprietary information, adds another layer of complexity.
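A back-of-the-envelope calculation shows how quickly storage needs grow. The figures below are illustrative assumptions, not measurements: 200,000 images averaging 2 MB each, kept in three copies (for example, the original plus two backups). The sketch uses decimal units (1 GB = 1,000 MB).

```python
def dataset_storage_gb(num_images: int, avg_image_mb: float, copies: int = 1) -> float:
    """Estimate raw storage for an image dataset in decimal gigabytes.

    Text (prompts, answers, CSV files) is usually tiny next to the images,
    so it is ignored here.
    """
    total_mb = num_images * avg_image_mb * copies
    return total_mb / 1000


if __name__ == "__main__":
    # Illustrative figures only: 200,000 images, ~2 MB each, 3 copies.
    print(dataset_storage_gb(200_000, 2.0, copies=3))  # 1200.0
```

Even this modest hypothetical collection reaches about 1.2 TB once backups are counted, before any preprocessed or augmented copies are added.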

Computing power requirements

Training and fine-tuning VLMs demand substantial computational resources. Learning from large datasets requires advanced hardware, typically powerful GPUs or specialized AI accelerators with enough memory to hold the model during training.

This can lead to high operational costs and may be a limiting factor for entities with limited budgets or access to cutting-edge technology.
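A commonly cited rule of thumb illustrates why hardware costs add up: full fine-tuning with the Adam optimizer in mixed precision needs roughly 16 bytes of GPU memory per model parameter (2 bytes for 16-bit weights, 2 for 16-bit gradients, 4 for a 32-bit master copy of the weights, and 8 for Adam's two 32-bit moment estimates). The estimate excludes activations, which depend on batch size and image resolution, and the 7-billion-parameter size is a hypothetical example rather than a specific model.

```python
# Rough per-parameter memory costs (bytes); activations are not included.
BYTES_FP16_WEIGHTS = 2
BYTES_FULL_FINETUNE_ADAM = 2 + 2 + 4 + 8  # weights, grads, fp32 master copy, Adam moments


def gpu_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Convert a parameter count and per-parameter cost to decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    params = 7e9  # hypothetical 7B-parameter VLM
    print(gpu_memory_gb(params, BYTES_FP16_WEIGHTS))        # 14.0  (just loading weights)
    print(gpu_memory_gb(params, BYTES_FULL_FINETUNE_ADAM))  # 112.0 (full fine-tuning state)
```

Under these assumptions, simply loading the model takes about 14 GB, while full fine-tuning needs about 112 GB, more than a single 80 GB data-center GPU provides, so training is usually spread across several GPUs.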

The intricacies of prompt engineering

Developing effective prompts for training VLMs is a subtle art. These prompts must be carefully designed to elicit the most informative and accurate responses from the model. Crafting these prompts requires a deep understanding of both the subject matter and the model’s capabilities.

Poorly designed prompts can lead to inaccurate or irrelevant outputs, undermining the effectiveness of the fine-tuned model. This challenge necessitates skilled data scientists and AI specialists, who are often in high demand.

Conclusion

In today’s digital era, customizing artificial intelligence by fine-tuning Vision Language Models (VLMs) on proprietary data not only preserves your unique business style but also enhances your operational efficiency.

The process, as outlined, involves uploading specific image data, converting it for model training, and leveraging this to refine the AI’s understanding and response capabilities. This tailored approach ensures your AI solutions resonate more closely with your company’s unique needs and challenges.

By employing this method, businesses can see significant improvements in various sectors, including healthcare, e-commerce, education, manufacturing, and IT. Each sector benefits from AI’s enhanced ability to interact with visual data, helping companies deliver targeted and effective solutions.

Overcoming challenges like data storage, computing power requirements, and prompt engineering is part of the journey toward creating powerful, customized AI tools that drive better business outcomes.

By integrating fine-tuned VLMs into your services, you not only streamline operations but also set a new standard in personalized AI services, making technology work smarter for your specific needs.

Ready to Transform Your Business with Fine-Tuned Vision Language Models?

Contact us today to learn how ARThink AI can help you leverage the power of customized VLMs to enhance your operations and gain a competitive edge.